Faster, More Accurate AI Inference

Drive breakthrough performance with your AI-enabled applications and services.

Inference is where AI delivers results, powering innovation across every industry. AI models are rapidly expanding in size, complexity, and diversity, pushing the boundaries of what’s possible. To use AI inference successfully, organizations and MLOps engineers need a full-stack approach that supports the end-to-end AI life cycle, along with tools that enable teams to meet their goals.

Deploy Next-Generation AI Applications With the NVIDIA AI Inference Platform

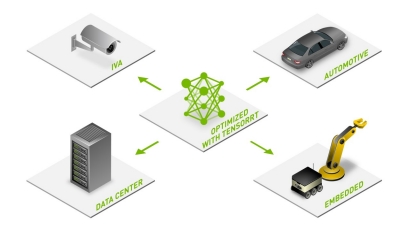

NVIDIA offers an end-to-end stack of products, infrastructure, and services that delivers the performance, efficiency, and responsiveness critical to powering the next generation of AI inference—in the cloud, in the data center, at the network edge, and in embedded devices. It’s designed for MLOps engineers, data scientists, application developers, and software infrastructure engineers with varying levels of AI expertise and experience.

NVIDIA’s full-stack architectural approach ensures that AI-enabled applications deploy with optimal performance, fewer servers, and less power, resulting in faster insights with dramatically lower costs.

NVIDIA AI Enterprise, an enterprise-grade inference platform, includes best-in-class inference software, reliable management, security, and API stability to ensure performance and high availability.

Explore the Benefits

Standardize Deployment

Standardize model deployment across applications, AI frameworks, model architectures, and platforms.

Integrate With Ease

Integrate easily with tools and platforms on public clouds, in on-premises data centers, and at the edge.

Lower Cost

Achieve high throughput and utilization from AI infrastructure, thereby lowering costs.

Scale Seamlessly

Scale inference seamlessly with application demand.

High Performance

Experience industry-leading performance with the platform that has consistently set multiple records in MLPerf, the leading industry benchmark for AI.

The End-to-End NVIDIA AI Inference Platform

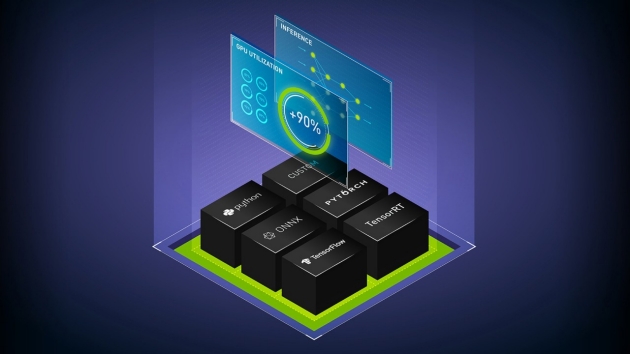

NVIDIA AI Inference Software

NVIDIA AI Enterprise consists of NVIDIA NIM, NVIDIA Triton™ Inference Server, NVIDIA® TensorRT™, and other tools that simplify building, sharing, and deploying AI applications. With enterprise-grade support, stability, manageability, and security, enterprises can accelerate time to value while eliminating unplanned downtime.

The Fastest Path to Generative AI Inference

NVIDIA NIM is easy-to-use software designed to accelerate deployment of generative AI across cloud, data center, and workstation.

Unified Inference Server For All Your AI Workloads

NVIDIA Triton Inference Server is open-source inference-serving software that helps enterprises consolidate bespoke AI model-serving infrastructure, shorten the time needed to deploy new AI models in production, and increase AI inferencing and prediction capacity.

An SDK for Optimizing Inference and Runtime

NVIDIA TensorRT delivers low latency and high throughput for high-performance inference. It includes NVIDIA TensorRT-LLM, an open-source library and Python API for defining, optimizing, and executing large language models (LLMs) for inference.

NVIDIA AI Inference Infrastructure

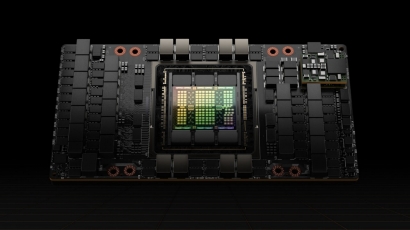

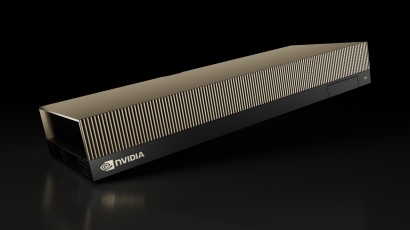

NVIDIA H100 Tensor Core GPU

H100 delivers the next massive leap in NVIDIA’s accelerated compute data center platform, securely accelerating diverse workloads in every data center, from small enterprise applications to exascale HPC and trillion-parameter AI.

NVIDIA L40S GPU

Combining NVIDIA’s full stack of inference serving software with the L40S GPU provides a powerful platform for trained models ready for inference. With support for structural sparsity and a broad range of precisions, the L40S delivers up to 1.7X the inference performance of the NVIDIA A100 Tensor Core GPU.

NVIDIA L4 GPU

L4 cost-effectively delivers universal, energy-efficient acceleration for video, AI, visual computing, graphics, virtualization, and more. The GPU delivers 120X higher AI video performance than CPU-based solutions, letting enterprises gain real-time insights to personalize content, improve search relevance, and more.
