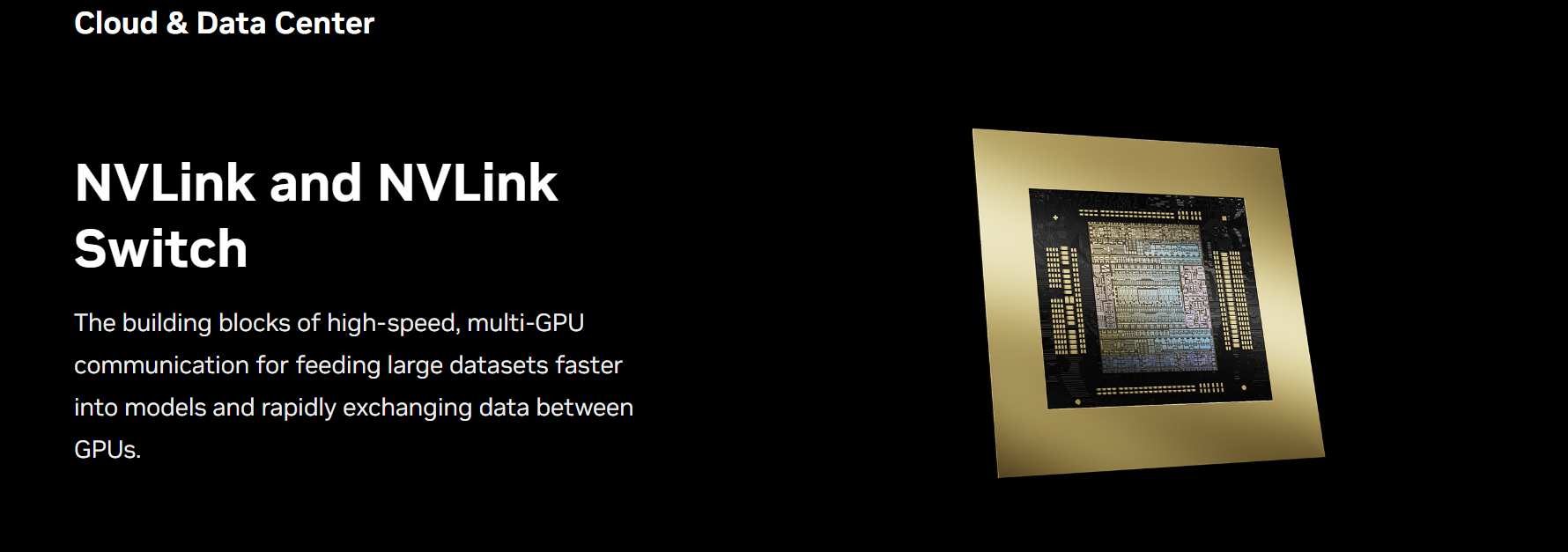

A Need for Faster Scale-Up Interconnects

Unlocking the full potential of exascale computing and trillion-parameter AI models hinges on swift, seamless communication between every GPU within a server cluster. The fifth generation of NVIDIA® NVLink® is a scale-up interconnect that unleashes accelerated performance for trillion- and multi-trillion-parameter AI models.

Maximize System Throughput with NVIDIA NVLink

Fifth-generation NVLink vastly improves scalability for larger multi-GPU systems. A single NVIDIA Blackwell Tensor Core GPU supports up to 18 NVLink connections of 100 gigabytes per second (GB/s) each, for a total bandwidth of 1.8 terabytes per second (TB/s). That is 2X the bandwidth of the previous generation and over 14X the bandwidth of PCIe Gen5. Server platforms like the GB200 NVL72 take advantage of this technology to deliver greater scalability for today’s most complex large models.
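The per-GPU figures above follow from simple arithmetic, which the short sketch below recomputes. The previous-generation value of 900GB/s is taken from the specifications table on this page. The PCIe Gen5 x16 figure of 128GB/s bidirectional is an assumption based on the commonly quoted raw rate and is not stated in the source; the function name is illustrative only.

```python
# Back-of-the-envelope check of the per-GPU NVLink figures quoted above.
LINKS_PER_GPU = 18            # fifth-generation NVLink links per Blackwell GPU
GB_PER_S_PER_LINK = 100       # bandwidth per link, in GB/s
PREV_GEN_GB_PER_S = 900       # fourth-generation NVLink per GPU (specifications table)
PCIE_GEN5_X16_GB_PER_S = 128  # ASSUMPTION: raw bidirectional rate of a PCIe Gen5 x16 slot


def nvlink_total_gb_per_s(links: int = LINKS_PER_GPU,
                          per_link: int = GB_PER_S_PER_LINK) -> int:
    """Return the total per-GPU NVLink bandwidth in GB/s."""
    return links * per_link


total = nvlink_total_gb_per_s()
print(f"Total per GPU: {total} GB/s ({total / 1000} TB/s)")
print(f"Versus previous generation: {total / PREV_GEN_GB_PER_S:.1f}X")
print(f"Versus PCIe Gen5 x16 (assumed): {total / PCIE_GEN5_X16_GB_PER_S:.2f}X")
```

Under the assumed PCIe figure, the ratio comes out slightly above 14, which matches the "over 14X" wording.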

NVLink Performance

NVLink in NVIDIA H100 increases inter-GPU communication bandwidth 1.5X compared to the previous generation, so researchers can use larger, more sophisticated applications to solve more complex problems.

Raise GPU Throughput With NVLink Communications

Fully Connect GPUs With NVIDIA NVLink and NVLink Switch

NVLink is a 1.8TB/s bidirectional, direct GPU-to-GPU interconnect that scales multi-GPU input and output (IO) within a server. The NVIDIA NVLink Switch chips connect multiple NVLinks to provide all-to-all GPU communication at full NVLink speed within a single rack and between racks.

To enable high-speed, collective operations, each NVLink Switch has engines for NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ for in-network reductions and multicast acceleration.
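To make the idea of an in-network reduction with multicast concrete, here is a toy model of the data flow. It is not NVIDIA's SHARP implementation and says nothing about how the switch engines work internally; all function names are hypothetical. The ring all-reduce cost used for comparison is the textbook formula, included only to show why reducing inside the switch can lower the amount of data each GPU has to send.

```python
# Toy model of an in-network reduction followed by a multicast.
# ASSUMPTION: this only illustrates the data flow, not the real SHARP engines.
from typing import List


def switch_allreduce(gpu_buffers: List[List[float]]) -> List[List[float]]:
    """Sum equally sized buffers "in the switch", then multicast the result."""
    if not gpu_buffers:
        return []
    length = len(gpu_buffers[0])
    if any(len(buf) != length for buf in gpu_buffers):
        raise ValueError("all GPU buffers must have the same length")
    # Reduction step: the switch adds the contributions element by element.
    reduced = [sum(values) for values in zip(*gpu_buffers)]
    # Multicast step: every GPU receives its own copy of the reduced buffer.
    return [list(reduced) for _ in gpu_buffers]


def bytes_sent_per_gpu_ring(num_gpus: int, size_bytes: int) -> float:
    """Per-GPU bytes sent by a textbook ring all-reduce: 2 * (N - 1) / N * S."""
    return 2 * (num_gpus - 1) / num_gpus * size_bytes


def bytes_sent_per_gpu_in_network(size_bytes: int) -> int:
    """Per-GPU bytes sent when the switch reduces: each buffer is sent once."""
    return size_bytes


buffers = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(switch_allreduce(buffers))
print(bytes_sent_per_gpu_ring(72, 1_000_000),
      bytes_sent_per_gpu_in_network(1_000_000))
```

In this simplified cost model, each GPU sends its buffer once when the switch performs the reduction, compared with nearly twice the buffer size for a ring all-reduce across many GPUs.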

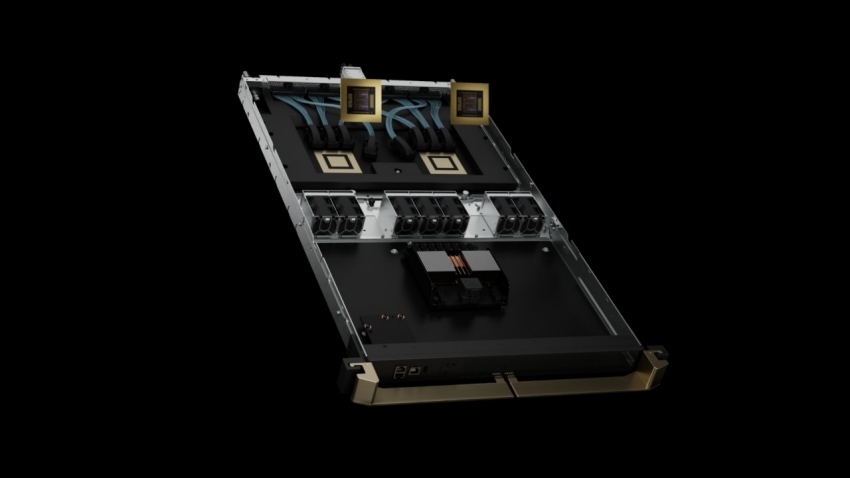

Train Multi-Trillion Parameter Models With NVLink Switch System

With NVLink Switch, NVLink connections can be extended across nodes to create a seamless, high-bandwidth, multi-node GPU cluster, effectively forming a data center-sized GPU. NVIDIA NVLink Switch enables 130TB/s of GPU bandwidth in one NVL72 for large model parallelism. Multi-server clusters with NVLink scale GPU communications in balance with the increased computing, so NVL72 can support 9X the GPU count of a single eight-GPU system.
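The 130TB/s and 9X figures can be cross-checked from numbers given elsewhere on this page: 72 GPUs per NVL72, 1,800GB/s of NVLink bandwidth per GPU, and an eight-GPU baseline system. The sketch below assumes the aggregate is simply the GPU count multiplied by the per-GPU bandwidth, which is an inference and not something the source states; the function name is illustrative.

```python
# Cross-check of the NVL72 aggregate bandwidth and GPU-count ratio.
GPUS_IN_NVL72 = 72        # GPUs in one GB200 NVL72
GPUS_IN_BASELINE = 8      # GPUs in a single eight-GPU system
GB_PER_S_PER_GPU = 1800   # fifth-generation NVLink bandwidth per GPU


def aggregate_gb_per_s(num_gpus: int, per_gpu: int = GB_PER_S_PER_GPU) -> int:
    """ASSUMPTION: aggregate bandwidth = GPU count x per-GPU bandwidth."""
    return num_gpus * per_gpu


nvl72_total = aggregate_gb_per_s(GPUS_IN_NVL72)
print(f"NVL72 aggregate: {nvl72_total / 1000} TB/s")
print(f"GPU count ratio: {GPUS_IN_NVL72 // GPUS_IN_BASELINE}X")
```

The product is 129.6TB/s, which rounds to the quoted 130TB/s.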

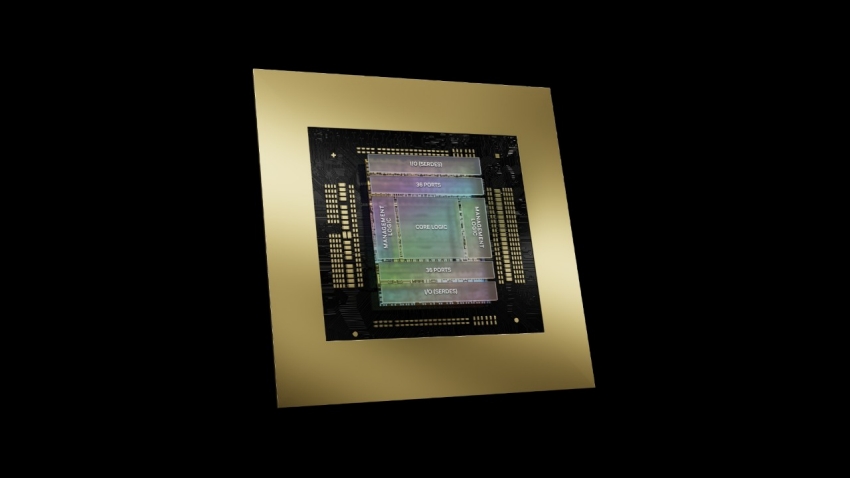

NVIDIA NVLink Switch

The NVIDIA NVLink Switch features 144 NVLink ports with a non-blocking switching capacity of 14.4TB/s. The rack switch is designed to provide high bandwidth and low latency in NVIDIA GB200 NVL72 systems supporting external fifth-generation NVLink connectivity.

Scaling From Enterprise to Exascale

Full Connection for Unparalleled Performance

The NVLink Switch is the first rack-level switch chip capable of supporting up to 576 fully connected GPUs in a non-blocking compute fabric. The NVLink Switch interconnects every GPU pair at an incredible 1,800GB/s. It supports full all-to-all communication. The 72 GPUs in GB200 NVL72 can be used as a single high-performance accelerator with up to 1.4 exaFLOPS of AI compute power.

The Most Powerful AI and HPC Platform

NVLink and NVLink Switch are essential building blocks of the complete NVIDIA data center solution that incorporates hardware, networking, software, libraries, and optimized AI models and applications from the NVIDIA AI Enterprise software suite and the NVIDIA NGC™ catalog. The most powerful end-to-end AI and HPC platform, it allows researchers to deliver real-world results and deploy solutions into production, driving unprecedented acceleration at every scale.

Specifications

- NVLink

| | Second Generation | Third Generation | Fourth Generation | Fifth Generation |
| --- | --- | --- | --- | --- |
| NVLink bandwidth per GPU | 300GB/s | 600GB/s | 900GB/s | 1,800GB/s |
| Maximum number of links per GPU | 6 | 12 | 18 | 18 |
| Supported NVIDIA architectures | NVIDIA Volta™ architecture | NVIDIA Ampere architecture | NVIDIA Hopper™ architecture | NVIDIA Blackwell architecture |

| | First Generation | Second Generation | Third Generation | NVLink Switch |
| --- | --- | --- | --- | --- |
| Number of GPUs with direct connection within an NVLink domain | Up to 8 | Up to 8 | Up to 8 | Up to 576 |
| NVSwitch GPU-to-GPU bandwidth | 300GB/s | 600GB/s | 900GB/s | 1,800GB/s |
| Total aggregate bandwidth | 2.4TB/s | 4.8TB/s | 7.2TB/s | 1PB/s |
| Supported NVIDIA architectures | NVIDIA Volta™ architecture | NVIDIA Ampere architecture | NVIDIA Hopper™ architecture | NVIDIA Blackwell architecture |

Preliminary specifications; may be subject to change.
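The "Total aggregate bandwidth" row for the switch generations appears to equal the maximum GPU count multiplied by the GPU-to-GPU bandwidth. That relationship is inferred from the numbers and is not stated in the source, so treat the sketch below as a consistency check under that assumption; the helper names are hypothetical.

```python
# Consistency check of the "Total aggregate bandwidth" row.
# ASSUMPTION: aggregate = max GPUs in the NVLink domain x GPU-to-GPU bandwidth.
ROWS = [
    # (column, max GPUs, GPU-to-GPU GB/s, aggregate as quoted in the table)
    ("First Generation", 8, 300, "2.4TB/s"),
    ("Second Generation", 8, 600, "4.8TB/s"),
    ("Third Generation", 8, 900, "7.2TB/s"),
    ("NVLink Switch", 576, 1800, "1PB/s"),
]


def aggregate_gb_per_s(max_gpus: int, per_gpu_gb_per_s: int) -> int:
    """Return the aggregate bandwidth in GB/s under the stated assumption."""
    return max_gpus * per_gpu_gb_per_s


def format_bandwidth(gb_per_s: int) -> str:
    """Format GB/s as TB/s, or as PB/s once the value reaches 1,000,000GB/s."""
    if gb_per_s >= 1_000_000:
        return f"{gb_per_s / 1_000_000:.2f}PB/s"
    return f"{gb_per_s / 1000:.1f}TB/s"


for column, gpus, per_gpu, quoted in ROWS:
    computed = format_bandwidth(aggregate_gb_per_s(gpus, per_gpu))
    print(f"{column}: computed {computed}, quoted {quoted}")
```

The three eight-GPU columns reproduce the quoted values exactly, and the 576-GPU column comes out just above the rounded 1PB/s figure.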
