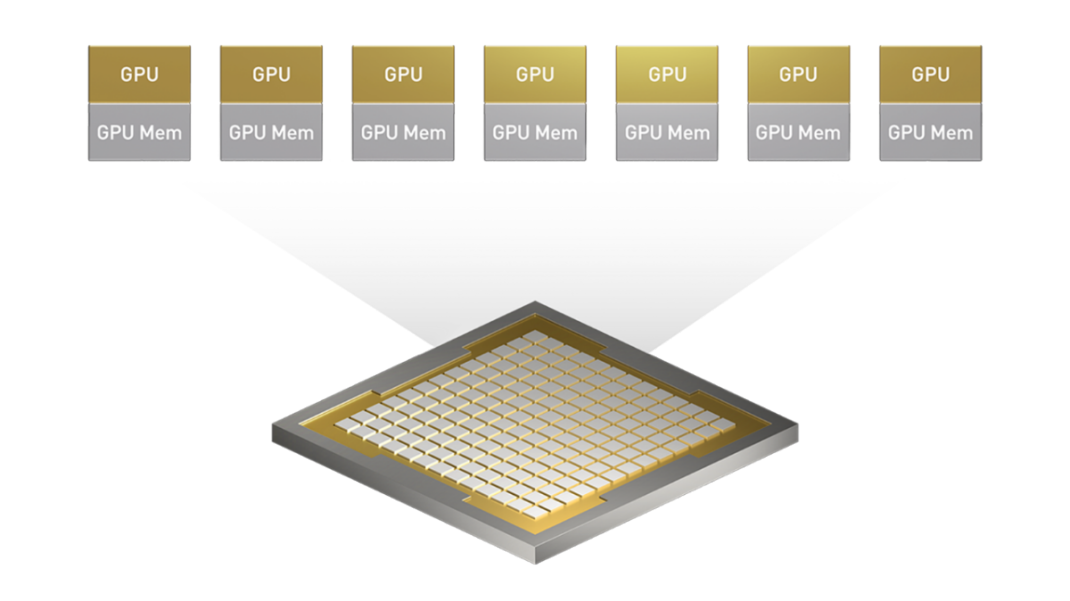

NVIDIA Multi-Instance GPU

Seven independent instances in a single GPU.

Multi-Instance GPU (MIG) expands the performance and value of NVIDIA Blackwell and Hopper™ generation GPUs. MIG can partition the GPU into as many as seven instances, each fully isolated with its own high-bandwidth memory, cache, and compute cores. This lets administrators support every workload, from the smallest to the largest, with guaranteed quality of service (QoS), and extends the reach of accelerated computing resources to every user.

Benefits Overview

Expand GPU Access

With MIG, you can achieve up to 7X more GPU resources on a single GPU. MIG gives researchers and developers more resources and flexibility than ever before.

Optimize GPU Utilization

MIG provides the flexibility to choose many different instance sizes, which allows provisioning of the right-sized GPU instance for each workload, ultimately optimizing utilization and maximizing data center investment.
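As a rough illustration of right-sizing, the sketch below picks the smallest instance size whose memory covers a workload's requirement. The sizes are the H100 instance types listed in the MIG specifications table on this page; the helper name `pick_instance` and the memory-only criterion are assumptions made for the example, since a real choice would also weigh compute and memory bandwidth.

```python
# H100 instance sizes from the MIG specifications table:
# (memory per instance in GB, maximum instances per GPU).
H100_INSTANCE_TYPES = [(10, 7), (20, 4), (40, 2), (80, 1)]


def pick_instance(required_gb, instance_types=H100_INSTANCE_TYPES):
    """Return the smallest (memory_gb, max_instances) entry that fits.

    Hypothetical helper: it considers memory only and returns None
    when no instance size is large enough.
    """
    for memory_gb, max_instances in sorted(instance_types):
        if required_gb <= memory_gb:
            return memory_gb, max_instances
    return None


if __name__ == "__main__":
    for need in (6, 18, 35, 80, 120):
        print(need, "GB ->", pick_instance(need))
```

A scheduler built on this idea would hand small inference jobs the 10GB size and reserve the full 80GB instance for jobs that need it.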

Run Simultaneous Workloads

MIG enables inference, training, and high-performance computing (HPC) workloads to run at the same time on a single GPU with deterministic latency and throughput. Unlike time slicing, each workload runs in parallel, delivering higher performance.

How the Technology Works

Without MIG, different jobs running on the same GPU, such as different AI inference requests, compete for the same resources. A job consuming larger memory bandwidth starves others, resulting in several jobs missing their latency targets. With MIG, jobs run simultaneously on different instances, each with dedicated resources for compute, memory, and memory bandwidth, resulting in predictable performance with QoS and maximum GPU utilization.

Provision and Configure Instances as Needed

Run Workloads in Parallel, Securely

MIG in Blackwell GPUs

Blackwell and Hopper GPUs support MIG with multi-tenant, multi-user configurations in virtualized environments across up to seven GPU instances, securely isolating each instance with confidential computing at the hardware and hypervisor level. Dedicated video decoders for each MIG instance deliver secure, high-throughput intelligent video analytics (IVA) on shared infrastructure. With concurrent MIG profiling, administrators can monitor right-sized GPU acceleration and allocate resources for multiple users.

Rather than renting a full cloud instance, researchers with smaller workloads can use MIG to securely isolate a portion of a GPU, with assurance that their data is protected at rest, in transit, and in use. This gives cloud service providers more flexibility to price and address smaller customer opportunities.

Built for IT and DevOps

MIG enables fine-grained GPU provisioning by IT and DevOps teams. Each MIG instance behaves like a standalone GPU to applications, so there’s no change to the CUDA® platform. MIG can be used in all major enterprise computing environments.
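Because an instance looks like an ordinary GPU to CUDA, pinning a process to one is mostly a matter of device selection. The sketch below assumes the standard `CUDA_VISIBLE_DEVICES` environment variable is set to a MIG device identifier (as listed by `nvidia-smi -L`); the helper name `env_for_mig` and the identifier shown are placeholders, not values from this page.

```python
import os


def env_for_mig(mig_device_id, base_env=None):
    """Return a copy of the environment restricted to one MIG device.

    Hypothetical helper: it only sets CUDA_VISIBLE_DEVICES and does not
    check that the identifier exists on the machine.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = mig_device_id
    return env


if __name__ == "__main__":
    # Placeholder identifier; copy a real one from `nvidia-smi -L`.
    env = env_for_mig("MIG-00000000-0000-0000-0000-000000000000")
    print(env["CUDA_VISIBLE_DEVICES"])
    # An unmodified CUDA program could then be started with, for example:
    # subprocess.run(["./my_cuda_app"], env=env, check=True)
```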

Deploy from Data Center to Edge

Use MIG on premises, in the cloud, and at the edge.

Leverage Containers

Run containerized applications on MIG instances.

Support Kubernetes

Schedule Kubernetes pods on MIG instances.
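A minimal sketch of what such a pod request can look like, built as a Python dictionary and printed as JSON (which `kubectl apply -f` also accepts). It assumes a cluster where the NVIDIA device plugin exposes MIG instances as named resources such as `nvidia.com/mig-1g.10gb`; the exact resource name depends on the GPU model and the plugin's MIG strategy, and the pod name and image below are placeholders.

```python
import json


def mig_pod_manifest(name, image, mig_resource="nvidia.com/mig-1g.10gb", count=1):
    """Build a pod manifest that requests `count` MIG instances.

    The default resource name is an assumption; check what your nodes
    advertise (for example with `kubectl describe node`).
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources are requested under `limits`.
                    "resources": {"limits": {mig_resource: count}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = mig_pod_manifest("mig-demo", "example.com/cuda-app:latest")
    print(json.dumps(manifest, indent=2))
```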

Virtualize Applications

Run applications on MIG instances inside a virtual machine.

MIG Specifications

| | GB200/B200/B100 | H100 |
|---|---|---|
| Confidential computing | Yes | Yes |
| Instance types | Up to 7x 23GB, up to 4x 45GB, up to 2x 95GB, up to 1x 192GB | 7x 10GB, 4x 20GB, 2x 40GB, 1x 80GB |
| GPU profiling and monitoring | Concurrently on all instances | Concurrently on all instances |
| Secure tenants | 7x | 7x |
| Media decoders | Dedicated NVJPEG and NVDEC per instance | Dedicated NVJPEG and NVDEC per instance |

Preliminary specifications, may be subject to change
