The NVIDIA GB200 NVL72 is an exascale computer in a single rack. With 36 GB200 superchips interconnected by the largest NVIDIA® NVLink® domain ever offered, the NVLink Switch System provides 130 terabytes per second (TB/s) of low-latency GPU communication for AI and high-performance computing (HPC) workloads.

NVIDIA GB200 NVL72

Powering the new era of computing.

Unlocking Real-Time Trillion-Parameter Models

GB200 NVL72 connects 36 Grace CPUs and 72 Blackwell GPUs in a liquid-cooled, rack-scale design. Its 72-GPU NVLink domain acts as a single massive GPU and delivers 30X faster real-time trillion-parameter LLM inference than NVIDIA HGX™ H100 (see the benchmark notes below).

The GB200 Grace Blackwell Superchip is a key component of the NVIDIA GB200 NVL72. It connects two high-performance NVIDIA Blackwell Tensor Core GPUs to an NVIDIA Grace CPU over the NVIDIA® NVLink®-C2C interconnect.
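
The rack totals follow directly from the superchip composition. The sketch below builds a hypothetical slot map (the `GpuSlot` record and `rack_layout` helper are illustrative names, not an NVIDIA API): 36 superchips, each with one Grace CPU and two Blackwell GPUs, and the 72 Arm cores per Grace CPU from the spec table. It only counts components; it does not model physical placement in the rack.

```python
from dataclasses import dataclass

# From the text: each GB200 superchip pairs 1 Grace CPU with 2 Blackwell GPUs
# over NVLink-C2C, and the NVL72 rack contains 36 superchips.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2
CORES_PER_GRACE = 72  # Arm Neoverse V2 cores per Grace CPU (spec table)


@dataclass(frozen=True)
class GpuSlot:
    """Hypothetical record for one GPU position in the rack (illustration only)."""
    global_index: int  # position in the 72-GPU NVLink domain
    superchip: int     # superchip / Grace CPU this GPU is attached to over NVLink-C2C
    local_index: int   # 0 or 1 within that superchip


def rack_layout(superchips: int = SUPERCHIPS_PER_RACK) -> list[GpuSlot]:
    """Enumerate every GPU slot, superchip by superchip."""
    return [
        GpuSlot(chip * GPUS_PER_SUPERCHIP + local, chip, local)
        for chip in range(superchips)
        for local in range(GPUS_PER_SUPERCHIP)
    ]


layout = rack_layout()
gpus = len(layout)
cpus = len({slot.superchip for slot in layout})
cores = cpus * CORES_PER_GRACE
print(gpus, cpus, cores)  # 72 36 2592
print(layout[5])          # GpuSlot(global_index=5, superchip=2, local_index=1)
```
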

Supercharging Next-Generation AI and Accelerated Computing

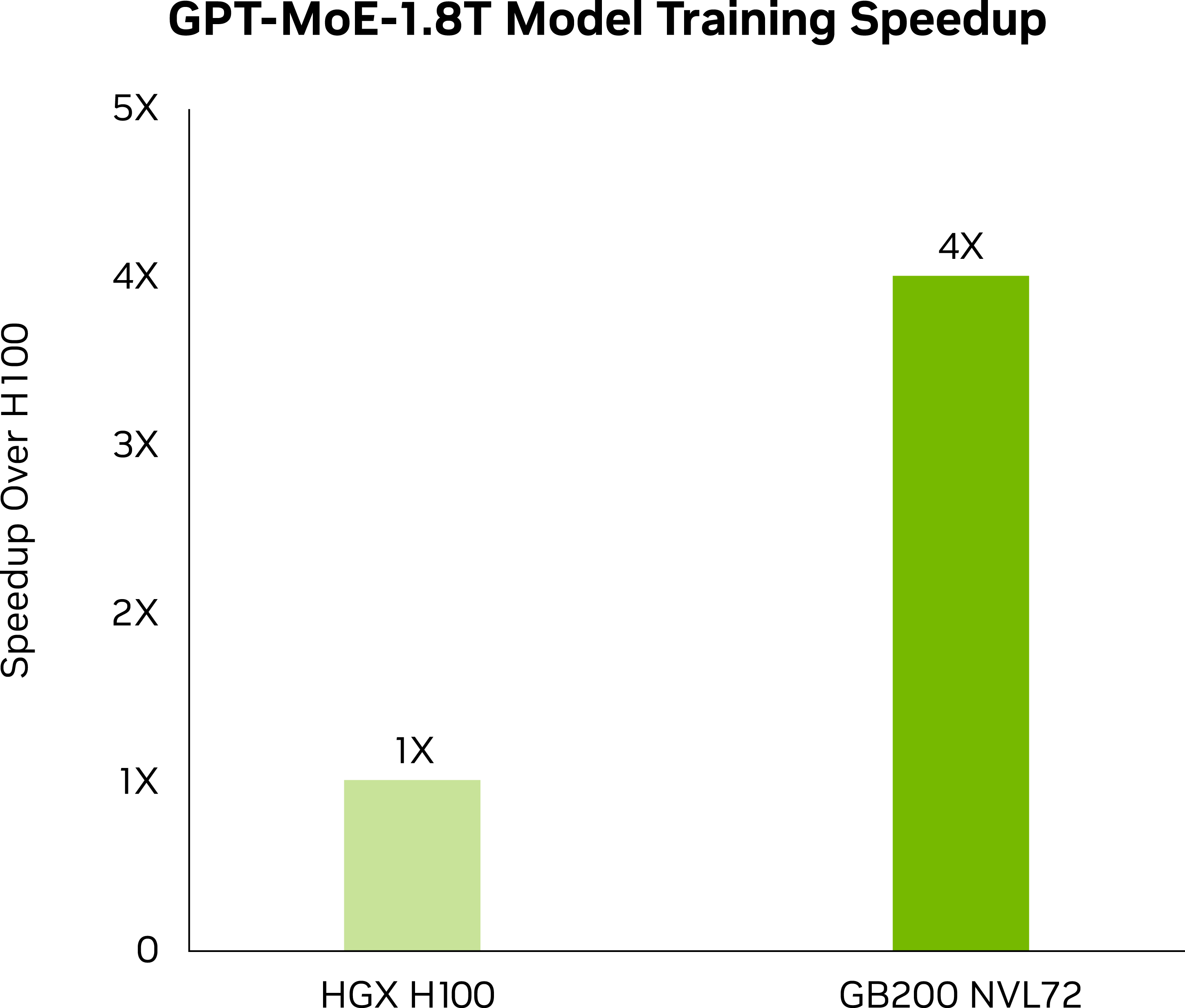

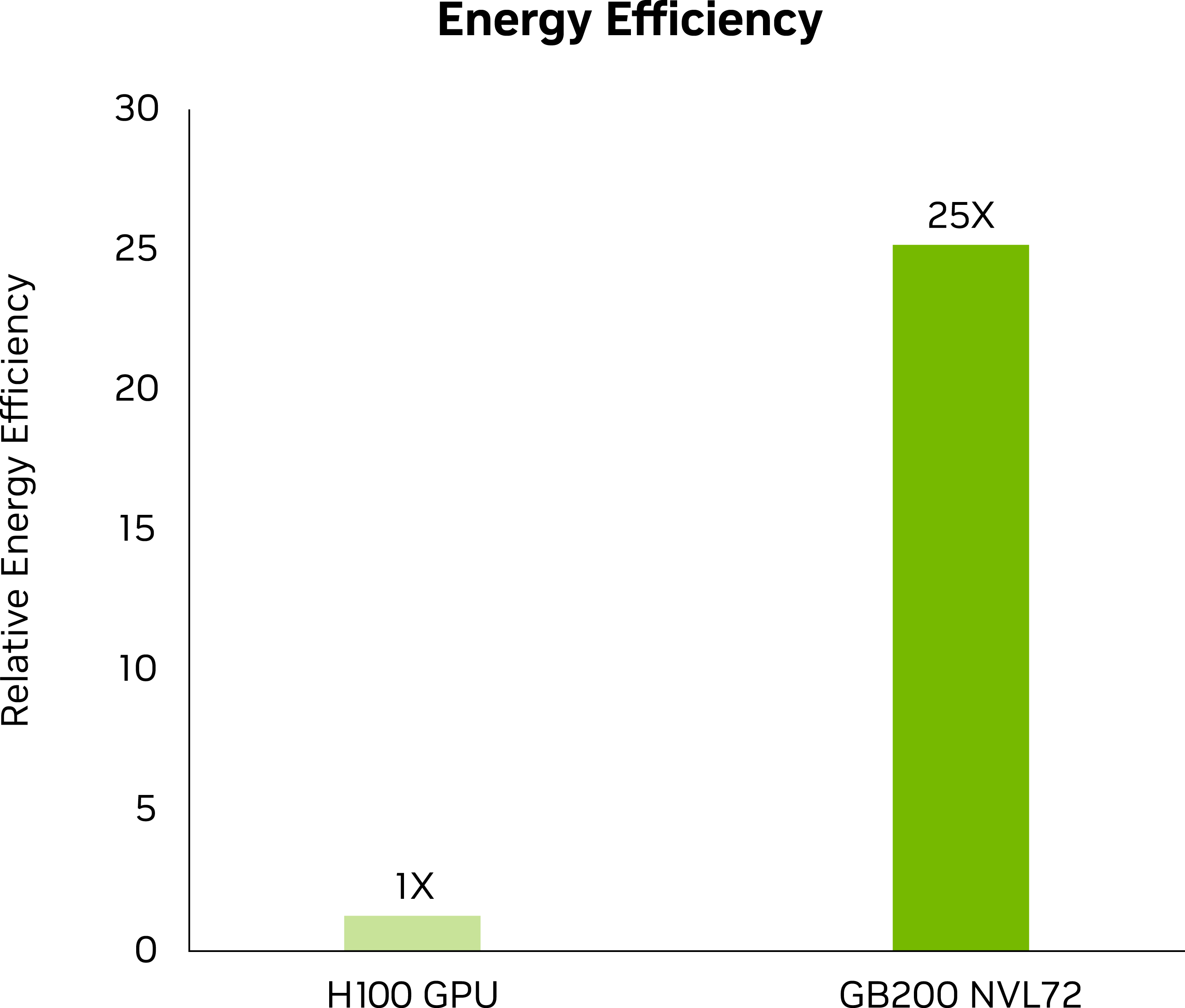

LLM inference and energy efficiency: token-to-token latency (TTL) = 50 milliseconds (ms) real time, first-token latency (FTL) = 5 s, 32,768 input/1,024 output tokens, NVIDIA HGX™ H100 scaled over InfiniBand (IB) vs. GB200 NVL72. Training: 1.8T-parameter mixture-of-experts (MoE) model, 4,096x HGX H100 scaled over IB vs. 456x GB200 NVL72 scaled over IB; cluster size: 32,768 GPUs.
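
To make the inference targets concrete, the sketch below turns TTL and FTL into a per-user decode rate and an end-to-end time for one request. It assumes the 1,024 outputs are tokens produced strictly one after another, with the first at FTL and each later one exactly TTL after the previous; that is a simplification for illustration, not how the benchmark was measured.

```python
# Latency targets from the benchmark notes. Treating 1,024 as an output-token
# count and assuming strictly sequential decoding are assumptions.
FTL_S = 5.0          # first-token latency, seconds
TTL_S = 0.050        # token-to-token latency, seconds (50 ms)
OUTPUT_TOKENS = 1024


def request_time_s(ftl_s: float, ttl_s: float, output_tokens: int) -> float:
    """Time until the last output token: the first token arrives at FTL,
    and each later token arrives TTL after the previous one."""
    if output_tokens < 1:
        raise ValueError("output_tokens must be >= 1")
    return ftl_s + (output_tokens - 1) * ttl_s


tokens_per_s_per_user = 1 / TTL_S
total_s = request_time_s(FTL_S, TTL_S, OUTPUT_TOKENS)
print(f"{tokens_per_s_per_user:.0f} tokens/s per user, {total_s:.2f} s per request")
```

Under these assumptions a 50 ms TTL means each user sees about 20 tokens per second, and a 1,024-token answer finishes roughly 56 seconds after the request.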

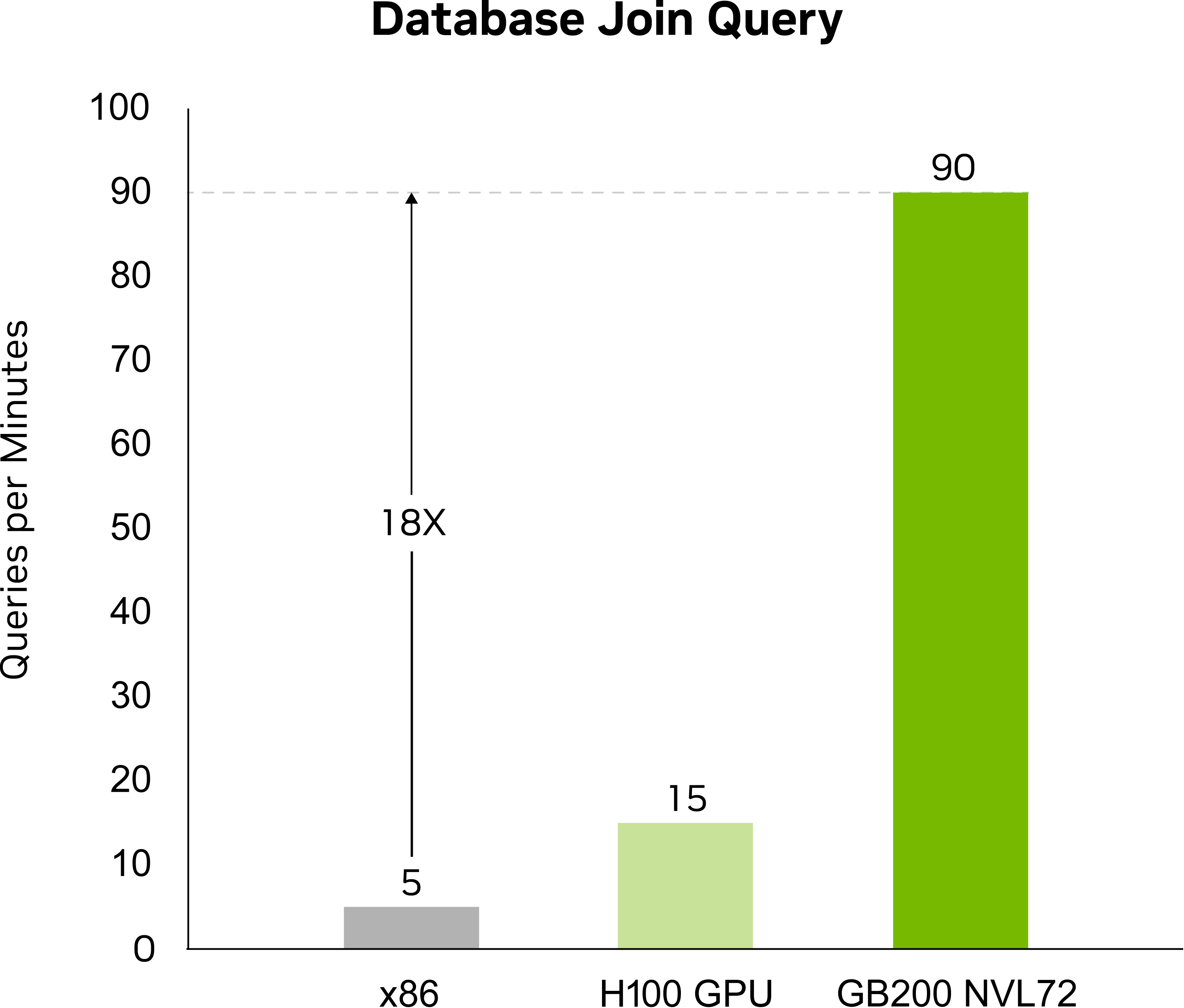

A database join-and-aggregation workload with Snappy/Deflate compression, derived from TPC-H Q4. Custom query implementations for x86, a single H100 GPU, and a single GPU from GB200 NVL72, vs. Intel Xeon 8480+.
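
TPC-H Q4 counts, per order priority, the orders placed in a three-month window that have at least one line item received after its commit date. The sketch below reproduces that join-and-aggregation shape on made-up rows in plain Python, with the standard-library `zlib` module standing in for Deflate compression (Snappy is not in the standard library and is omitted). It illustrates the query logic only; it is not NVIDIA's custom implementation and says nothing about the measured speedups.

```python
import json
import zlib
from collections import Counter
from datetime import date

# Made-up toy rows shaped like TPC-H ORDERS and LINEITEM (not benchmark data).
orders = [  # (o_orderkey, o_orderdate, o_orderpriority)
    (1, date(1993, 7, 5), "1-URGENT"),
    (2, date(1993, 8, 20), "3-MEDIUM"),
    (3, date(1993, 9, 1), "1-URGENT"),
    (4, date(1993, 11, 2), "2-HIGH"),       # outside the query quarter
]
lineitem = [  # (l_orderkey, l_commitdate, l_receiptdate)
    (1, date(1993, 7, 10), date(1993, 7, 15)),  # received late
    (2, date(1993, 8, 25), date(1993, 8, 24)),  # on time
    (3, date(1993, 9, 10), date(1993, 9, 12)),  # received late
    (3, date(1993, 9, 11), date(1993, 9, 13)),  # second late line, same order
    (4, date(1993, 11, 5), date(1993, 11, 9)),  # late, but its order is out of range
]


def deflate_lineitem(rows):
    """Serialize to JSON and compress with zlib (Deflate) to mimic a compressed column store."""
    payload = json.dumps([[k, c.isoformat(), r.isoformat()] for k, c, r in rows])
    return zlib.compress(payload.encode("utf-8"))


def inflate_lineitem(blob):
    """Decompress and decode back into (key, commitdate, receiptdate) tuples."""
    rows = json.loads(zlib.decompress(blob).decode("utf-8"))
    return [(k, date.fromisoformat(c), date.fromisoformat(r)) for k, c, r in rows]


def q4_like(orders, lineitem, start, end):
    """Count orders per priority in [start, end) with at least one late line item."""
    late_orders = {k for k, commit, receipt in lineitem if commit < receipt}  # EXISTS as a semi-join
    counts = Counter(
        prio for key, odate, prio in orders if start <= odate < end and key in late_orders
    )
    return sorted(counts.items())  # ORDER BY o_orderpriority


compressed = deflate_lineitem(lineitem)
result = q4_like(orders, inflate_lineitem(compressed), date(1993, 7, 1), date(1993, 10, 1))
print(result)  # [('1-URGENT', 2)]
```

Building a set of late order keys first mirrors the `EXISTS` subquery: an order with several late line items (order 3 above) is still counted once.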

Projected performance subject to change.

Performance highlights: Real-Time LLM Inference, Massive-Scale Training, Energy-Efficient Infrastructure, and Data Processing.

Features

Technological Breakthroughs

Blackwell Architecture

The NVIDIA Blackwell architecture delivers groundbreaking advancements in accelerated computing, powering a new era of computing with unparalleled performance, efficiency, and scale.

NVIDIA Grace CPU

The NVIDIA Grace CPU is a breakthrough processor designed for modern data centers running AI, cloud, and HPC applications. It provides outstanding performance and memory bandwidth with 2X the energy efficiency of today’s leading server processors.

Fifth-Generation NVIDIA NVLink

Unlocking the full potential of exascale computing and trillion-parameter AI models requires swift, seamless communication between every GPU in a server cluster. The fifth generation of NVLink is a scale-up interconnect that unleashes accelerated performance for trillion- and multi-trillion-parameter AI models.
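
One way to see why per-GPU bandwidth matters is a bandwidth-only estimate of a gradient all-reduce across the 72-GPU NVLink domain. The sketch below uses the classic ring all-reduce cost model, which is a swapped-in textbook technique; real collective libraries may pick other algorithms inside an NVLink Switch domain. The 1.8 TB/s per GPU comes from the spec table (3.6 TB/s per superchip, 2 GPUs); splitting it evenly between send and receive, and the 2 GB payload, are assumptions for illustration.

```python
# Figures derived from the spec table: 3.6 TB/s per superchip / 2 GPUs.
NVLINK_PER_GPU_BPS = 3.6e12 / 2          # 1.8 TB/s per GPU (aggregate)
# ASSUMPTION: the aggregate figure is split evenly between send and receive.
NVLINK_PER_DIRECTION_BPS = NVLINK_PER_GPU_BPS / 2
GPUS_IN_DOMAIN = 72


def ring_allreduce_time_s(payload_bytes: float, n_gpus: int, bw_per_direction: float) -> float:
    """Bandwidth-only cost of a ring all-reduce: reduce-scatter plus all-gather,
    each moving (N-1)/N of the payload through every GPU's link.
    Ignores per-step latency, protocol overhead, and compute/comm overlap."""
    if n_gpus < 2:
        return 0.0
    return 2 * (n_gpus - 1) / n_gpus * payload_bytes / bw_per_direction


payload = 2e9  # hypothetical 2 GB gradient buffer (illustration only)
t = ring_allreduce_time_s(payload, GPUS_IN_DOMAIN, NVLINK_PER_DIRECTION_BPS)
print(f"{t * 1e3:.2f} ms")
```

Because the factor 2(N-1)/N approaches 2 as N grows, the estimate is dominated by payload size divided by per-GPU bandwidth, which is why a faster scale-up link shortens every synchronization step.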

NVIDIA Networking

The data center’s network plays a crucial role in driving AI advancements and performance, serving as the backbone for distributed AI model training and generative AI performance. NVIDIA Quantum-X800 InfiniBand, NVIDIA Spectrum™-X800 Ethernet, and NVIDIA BlueField®-3 DPUs enable efficient scaling across hundreds to thousands of Blackwell GPUs for optimal application performance.
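
When scaling out, the number of NVL72 racks needed for a target GPU count is a ceiling division by 72. Applied to the 32,768-GPU cluster in the benchmark notes, it yields the 456 racks quoted there (456 × 72 = 32,832, so the last rack is only partly needed). This is one plausible reading of how that figure was chosen; the notes do not say. The 8 GPUs per HGX H100 system is an assumption used to recover 32,768 from 4,096 systems.

```python
GPUS_PER_NVL72 = 72      # one 72-GPU NVLink domain per rack (from the text)
GPUS_PER_HGX_H100 = 8    # ASSUMPTION: 8-GPU HGX H100 systems


def nvl72_racks_needed(total_gpus: int) -> int:
    """Smallest number of 72-GPU racks that provides at least total_gpus GPUs."""
    if total_gpus < 0:
        raise ValueError("total_gpus must be non-negative")
    return -(-total_gpus // GPUS_PER_NVL72)  # integer ceiling division


cluster_gpus = 4096 * GPUS_PER_HGX_H100   # 4,096 HGX H100 systems from the notes
print(cluster_gpus)                        # 32768
print(nvl72_racks_needed(cluster_gpus))    # 456
```
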

Specifications

GB200 NVL72 Specs¹

| | GB200 NVL72 | GB200 Grace Blackwell Superchip |
|---|---|---|
| Configuration | 36 Grace CPUs : 72 Blackwell GPUs | 1 Grace CPU : 2 Blackwell GPUs |
| FP4 Tensor Core² | 1,440 PFLOPS | 40 PFLOPS |
| FP8/FP6 Tensor Core² | 720 PFLOPS | 20 PFLOPS |
| INT8 Tensor Core² | 720 POPS | 20 POPS |
| FP16/BF16 Tensor Core² | 360 PFLOPS | 10 PFLOPS |
| TF32 Tensor Core | 180 PFLOPS | 5 PFLOPS |
| FP32 | 6,480 TFLOPS | 180 TFLOPS |
| FP64 | 3,240 TFLOPS | 90 TFLOPS |
| FP64 Tensor Core | 3,240 TFLOPS | 90 TFLOPS |
| GPU Memory / Bandwidth | Up to 13.5 TB HBM3e / 576 TB/s | Up to 384 GB HBM3e / 16 TB/s |
| NVLink Bandwidth | 130 TB/s | 3.6 TB/s |
| CPU Core Count | 2,592 Arm® Neoverse V2 cores | 72 Arm Neoverse V2 cores |
| CPU Memory / Bandwidth | Up to 17 TB LPDDR5X / Up to 18.4 TB/s | Up to 480 GB LPDDR5X / Up to 512 GB/s |

1. Preliminary specifications. May be subject to change.
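
Because the rack holds 36 superchips, most rack-level figures should equal 36 times the superchip column. The sketch below checks that with a 2% tolerance to absorb rounding (for example, 36 × 3.6 TB/s = 129.6 TB/s versus the quoted 130 TB/s), treating 1 TB as 1,000 GB (an assumption). Under those assumptions it flags only GPU memory capacity: 36 × 384 GB = 13,824 GB, while the rack column says up to 13.5 TB. The table does not explain that gap; footnote 1 marks all figures as preliminary.

```python
import math

SUPERCHIPS_PER_RACK = 36

# Superchip column of the table (units in each key name).
SUPERCHIP = {
    "fp4_pflops": 40, "fp8_pflops": 20, "int8_pops": 20, "fp16_pflops": 10,
    "tf32_pflops": 5, "fp32_tflops": 180, "fp64_tflops": 90,
    "hbm_gb": 384, "hbm_bw_tbps": 16, "nvlink_tbps": 3.6,
    "cpu_cores": 72, "lpddr5x_gb": 480, "lpddr5x_bw_gbps": 512,
}

# GB200 NVL72 column, converted to the same units (1 TB = 1,000 GB assumed).
RACK = {
    "fp4_pflops": 1440, "fp8_pflops": 720, "int8_pops": 720, "fp16_pflops": 360,
    "tf32_pflops": 180, "fp32_tflops": 6480, "fp64_tflops": 3240,
    "hbm_gb": 13_500, "hbm_bw_tbps": 576, "nvlink_tbps": 130,
    "cpu_cores": 2592, "lpddr5x_gb": 17_000, "lpddr5x_bw_gbps": 18_400,
}


def scaling_mismatches(per_chip, rack, n, rel_tol=0.02):
    """Return entries where n * per_chip differs from the rack figure by more than rel_tol."""
    out = {}
    for key, value in per_chip.items():
        expected = value * n
        if not math.isclose(expected, rack[key], rel_tol=rel_tol):
            out[key] = (expected, rack[key])
    return out


print(scaling_mismatches(SUPERCHIP, RACK, SUPERCHIPS_PER_RACK))  # {'hbm_gb': (13824, 13500)}
```
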
